Separating Predictions from Decisions: Model Execution and Decision Engine

Introduction

Before the 1960s, predictions and decisions were, to varying degrees, mixed together in credit granting. “Should an enterprise accept your application for credit?” is a question that requires assessing your creditworthiness, which ultimately entails predicting whether you will repay.

Originally, prediction and decision were closely coupled: predicting that someone was creditworthy typically meant automatically deciding to grant them credit.

However, creditworthiness does not, or should not, imply automatic credit approval. As Ted Lewis of Fair, Isaac observed in the early 1990s, “In using traditional methods the credit analysts did not … note … they were answering two questions … what is the risk presented by the applicant, and … what is the maximum risk that the enterprise should accept.” Teasing apart predictions and decisions has been a prominent and successful theme over the last sixty years.

This article traces how the conceptual segregation of prediction and decision has contributed to the independent evolution of predictive model building and of automated decision engines. Once these two pieces of the puzzle are identified, it becomes possible to conceptualise model execution and the decision engine as independent components.

Over time, there has been a development trajectory that alternates between integration and segregation of model implementation, decision process configuration, and the execution of both the model and the decision process.

Today, we have arrived at a standard whereby model execution can be separated from the decision engine. Here we explore some of the pros and cons of that separation and some risks and temptations around having an independent model execution.

A Little Historical Perspective

Over those same sixty years, technologies have emerged to help automate both the predicting and deciding sides of the interactions between credit grantors and credit applicants. Today, the state of the art is that predictive models of risk are built with a variety of technologies and then executed at the time of application to quantify risk.

The resulting risk assessment is used by automated decision processes that business users configure in the decision engine. The decision process represents the business’s appetite for risk and the strategies and tactics implemented to manage it.

The life cycles of predictive models and decision processes are different, and both have changed over time. Years ago, it would take months of analysis and testing to develop a robust credit risk model that might serve usefully for several years, perhaps with minor adjustments over time. The life cycle of the predictive model was longer than that of the decision processes that exploited the prediction, because the business user who configured the decision process was frequently and proactively strategising or reactively responding to a changing business environment.

From the 1980s to the early 2000s, the process of analysis to create a model was called model or scorecard building. The output of that process was a scorecard or a scoring formula. The resulting formulas were straightforward, usually comprising relatively simple logic and arithmetic that could be computed quickly. Integrating the implementation of the scorecard, and thus the scoring execution, within the decision engine was a sensible and useful approach.
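To illustrate how simple such a formula can be, here is a minimal sketch of a points-based scorecard. The characteristics, bands, base points, and point values are all invented for illustration and are not taken from any real scorecard.

```python
# Hypothetical scorecard: every characteristic, band, and point value below
# is invented for illustration only.
BASE_POINTS = 200

# Each characteristic maps to a list of (exclusive upper bound, points) bands,
# checked in order; the final band catches every remaining value.
SCORECARD = {
    "years_at_address": [(2, 10), (5, 25), (float("inf"), 40)],
    "age": [(25, 5), (40, 20), (float("inf"), 35)],
    "num_delinquencies": [(1, 50), (3, 15), (float("inf"), -30)],
}


def score_applicant(applicant: dict) -> int:
    """Add the base points to the points of the band each value falls in."""
    total = BASE_POINTS
    for characteristic, bands in SCORECARD.items():
        value = applicant[characteristic]
        for upper_bound, points in bands:
            if value < upper_bound:
                total += points
                break
    return total


applicant = {"years_at_address": 3, "age": 42, "num_delinquencies": 0}
print(score_applicant(applicant))  # 200 + 25 + 35 + 50 = 310
```

Logic of this kind is cheap to evaluate, which is why hosting it inside the decision engine was a natural choice.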

This integration meant that other responsibilities, such as generating new variables from more primitive data sources, could reside in the decision engine. Further, the elaboration of the decision could naturally be represented and derived in the decision engine, for example, going beyond a simple response of accept | refer | decline to include details of credit offers (such as facility sizes, rates, and terms and conditions, perhaps across an array of product options).

These decisions were frequently made in the context of multiple portfolios to address different sub-populations with different product choices. All of this was frequently bound by policy rules reflecting operational, regulatory, or even strategic constraints and all changing over time based on business circumstances.

Later, as the new millennium progressed, artificial intelligence matured into machine learning (and back to artificial intelligence); the role of the data scientist emerged with new and powerful model building and model execution tools readily at hand. The life cycle for model building has become greatly accelerated (or at least promises to), and the scope of the models can be greatly expanded.

The output of model building is often not just a simple formula. Advanced tools allow for more sophisticated feature engineering, enabling the extraction of valuable insights from a wider range of data sources. This can potentially enhance the predictive power of credit risk models by incorporating non-traditional variables.

Model Execution as Model Object with a Run Method Behind an Endpoint

With the advent of more sophisticated data science tools and techniques, a new standard for model implementation has arisen. It moves model execution from within the decision engine to behind a well-managed and scalable endpoint. Each model offers its own endpoint.

Model execution, which previously may have contributed insignificantly to an overall decision engine process execution, might now be quite costly to compute. Here, segregating the model execution behind an endpoint means that the required model execution computational resource can be scaled independently of the decision engine.

Computational Power behind an Endpoint – a Temptation

Is this segregation of prediction and decision appropriate? What about reconsidering the role of the decision engine once prediction is removed from the decision process? When a decision scientist sees the computational power behind an endpoint, there is a temptation. Taken to the extreme, do we really need a decision engine if we can instead move the decision into the model execution components?

As part of the standard model implementation, for example in Azure model building, there is a scoring or inference script available for various purposes: a score.py module. The model builder can modify this Python module to handle generated variables or other input variable mapping, for example. It can also be used to scale the output of a model execution, which is typically a probability.

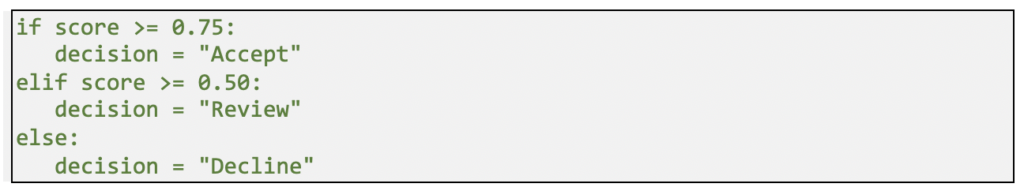
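As a rough sketch of what such a script might contain: Azure Machine Learning scoring scripts conventionally define an `init()` function, called once at start-up, and a `run()` function, called per request. Everything else below is an assumption made for illustration: the `StubModel` class and its formula, the input field names, and the scaling parameters (`base_score`, `base_odds`, `pdo`) are hypothetical, and a real script would load a trained model from disk rather than construct a stub.

```python
import json
import math

model = None  # populated by init()


class StubModel:
    """Stand-in for a trained model; a real script would load one from disk."""

    def predict_proba_bad(self, features: dict) -> float:
        # Invented logistic formula, for illustration only.
        z = -2.0 + 1.5 * features["debt_to_income"]
        return 1.0 / (1.0 + math.exp(-z))


def init():
    """Called once when the service starts: load the model."""
    global model
    model = StubModel()


def scale_score(p_bad: float, base_score=600, base_odds=30.0, pdo=20) -> int:
    """Map a probability of 'bad' onto a points scale (assumed parameters).

    Good:bad odds equal to base_odds map to base_score, and every doubling
    of the odds adds pdo points.
    """
    odds_good = (1.0 - p_bad) / p_bad
    factor = pdo / math.log(2)
    return round(base_score + factor * math.log(odds_good / base_odds))


def run(raw_data: str) -> str:
    """Called per request: derive variables, execute the model, scale output."""
    record = json.loads(raw_data)
    features = {
        # Generated variable built from more primitive inputs.
        "debt_to_income": record["monthly_debt"] / record["monthly_income"],
    }
    p_bad = model.predict_proba_bad(features)
    return json.dumps({"probability_bad": p_bad, "score": scale_score(p_bad)})


init()
print(run(json.dumps({"monthly_debt": 500, "monthly_income": 2500})))
```

Note that the sketch returns only a prediction and its scaled form; it contains no accept or decline logic.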

The existence of this relatively simple program also presents a tempting option that can lure the data scientist into a quick-and-dirty implementation of what is ultimately a truncated decision process. For example, the data scientist can drop some code similar to the following into the script once the score is computed:

A simple decision sample
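A minimal sketch of the kind of snippet meant here might look as follows. The cutoff values and the function name are hypothetical.

```python
# Hypothetical cutoffs hard-coded by the model builder. This is the shortcut
# the article warns against: changing them means redeploying the model code.
ACCEPT_CUTOFF = 620
DECLINE_CUTOFF = 580


def quick_and_dirty_decision(score: int) -> str:
    """Truncated decision process embedded next to the model execution."""
    if score >= ACCEPT_CUTOFF:
        return "accept"
    if score < DECLINE_CUTOFF:
        return "decline"
    return "refer"


print(quick_and_dirty_decision(640))  # accept
print(quick_and_dirty_decision(600))  # refer
print(quick_and_dirty_decision(500))  # decline
```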

In some cases, the temptation might even induce the data scientist to code policy rules as part of the implementation above.

A little thought will demonstrate that this approach has many disadvantages. The decision process is more complex and rightly belongs under the control of the credit risk manager, who is responsible for managing the portfolio(s) and has more knowledge and understanding of credit policy.

Defence of Old-Fashioned Scorecards versus New Tech Models

We will explore more of the factors supporting segregation below, but it is worth pausing in this section to consider some pros and cons of the rush to migrate to new modelling technologies.

Many of these new model building technologies are not radical departures from the analytic and statistical approaches that yielded traditional scorecards. Their big advantage is that so many techniques are available as modules with similar APIs within a single technical ecosystem. That ecosystem can potentially improve productivity and provide implementation or execution as a natural extension of the model development components.

These technologies are not panaceas to produce “better” models, and there is still a lot of value in the standard scorecard. Some of the pros and cons of new tech models are as follows:

Pros

- possible improved predictive accuracy

- flexibility and adaptability

- more complex feature engineering

- more quickly developed and deployed

Cons

- possible neglect of operational realities

- complexity which makes interpretability more challenging

- harder to ensure accuracy, fairness, and regulatory compliance

- can lead more easily to the overfitting of data

Factors for Segregating Model Execution and Decision Engine

The temptation described above reflects a failure to consider thoughtfully what other value the separate decision process provides. Here we consider the factors that argue for separating model execution and the decision engine.

Efficiency and Scalability

One of the primary reasons for segregating model execution and decisioning is efficiency. As suggested, model execution (depending on the model technologies employed) might be computationally costly. When model execution and the decision engine can be scaled independently, computing resources can be allocated more efficiently.

Flexibility and Customisation

Separating model execution and decisioning also promotes flexibility and customisation. For example, model segmentation can be configured inside the decision engine, which can then call out to different model instances depending on the segment or sub-population.

Different models or score types might be included within a decision process. For example, you might first predict credit risk (calling the appropriate model endpoints) and then predict response (calling the appropriate endpoints for that model).
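The segment-based routing described above can be sketched as follows. The model functions here are stand-ins for remote endpoints (in practice each would be a network call to a separately deployed model), and the segment names, the segmentation rule, and the returned probabilities are hypothetical.

```python
from typing import Callable, Dict, Tuple


# Stand-ins for remote model endpoints; names and outputs are hypothetical.
def risk_model_new_to_credit(applicant: dict) -> float:
    return 0.08


def risk_model_established(applicant: dict) -> float:
    return 0.03


def response_model(applicant: dict) -> float:
    return 0.40


# Registry keyed by (score type, segment), configured in the decision engine.
ENDPOINTS: Dict[Tuple[str, str], Callable[[dict], float]] = {
    ("risk", "new_to_credit"): risk_model_new_to_credit,
    ("risk", "established"): risk_model_established,
    ("response", "all"): response_model,
}


def segment_of(applicant: dict) -> str:
    """Segmentation rule held in the decision engine (assumed rule)."""
    return "new_to_credit" if applicant["months_on_file"] < 12 else "established"


def gather_predictions(applicant: dict) -> dict:
    """Call the risk model for the applicant's segment, then the response model."""
    segment = segment_of(applicant)
    return {
        "segment": segment,
        "p_bad": ENDPOINTS[("risk", segment)](applicant),
        "p_response": ENDPOINTS[("response", "all")](applicant),
    }


print(gather_predictions({"months_on_file": 6}))
```

Adding a segment or swapping a model then becomes a change to the registry rather than to the models themselves.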

A configurable decision engine enables credit grantors to define rules and other criteria independently of the underlying models that might be referenced during the decision process. This means that as business needs change or regulations evolve, the decision-making processes can be adjusted without the need for extensive model retraining or redevelopment.
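As a minimal sketch of this independence, the decision logic below reads its policy rule and risk cutoffs from configuration and treats the model's prediction as a plain input. The configuration keys, threshold values, and reason texts are hypothetical.

```python
# Hypothetical decision configuration, owned by the credit risk manager and
# changeable without touching the model.
DECISION_CONFIG = {
    "min_age": 18,
    "accept_below_p_bad": 0.05,
    "decline_above_p_bad": 0.15,
}


def decide(applicant: dict, p_bad: float, config: dict) -> dict:
    """Apply the policy rule first, then the risk cutoffs, from configuration."""
    if applicant["age"] < config["min_age"]:
        return {"decision": "decline", "reason": "policy: below minimum age"}
    if p_bad < config["accept_below_p_bad"]:
        return {"decision": "accept", "reason": "risk within appetite"}
    if p_bad > config["decline_above_p_bad"]:
        return {"decision": "decline", "reason": "risk above appetite"}
    return {"decision": "refer", "reason": "risk between cutoffs"}


applicant = {"age": 30}
print(decide(applicant, 0.07, DECISION_CONFIG)["decision"])  # refer

# Tightening risk appetite is a configuration change, not a model change.
tighter = {**DECISION_CONFIG, "decline_above_p_bad": 0.06}
print(decide(applicant, 0.07, tighter)["decision"])  # decline
```

The same prediction of 0.07 yields a different outcome under the tighter configuration, with no retraining involved.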

A good decision engine will also support affordability driven facility allocations, champion/challenger testing, simulations, version control, rich user access control, and auditing.
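Champion/challenger testing, for instance, needs a stable way to route a share of applications to an alternative strategy. One possible approach, sketched here under the assumption that each application carries a unique identifier, is to hash that identifier into a bucket. The function name, the identifier format, and the 10% share are hypothetical.

```python
import hashlib


def assign_strategy(application_id: str, challenger_share: float = 0.10) -> str:
    """Deterministically route a share of applications to the challenger."""
    digest = hashlib.sha256(application_id.encode("utf-8")).hexdigest()
    # Map the first 8 hex digits onto the interval [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "challenger" if bucket < challenger_share else "champion"


ids = [f"APP-{n:05d}" for n in range(10_000)]
share = sum(assign_strategy(i) == "challenger" for i in ids) / len(ids)
print(f"challenger share: {share:.3f}")
```

Because the assignment depends only on the identifier, the same application receives the same strategy every time it is evaluated, which keeps the test auditable.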

Transparency and Interpretability

With a variety of model development technologies, the prediction-making process may be somewhat obscure. With an independent and segregated decision engine, it is possible to provide additional configured details. These help to make the prediction more transparent or interpretable both to the ultimate end consumer and to the regulatory agencies responsible for inspecting or enforcing fair credit laws, which are growing ever more prevalent and stricter around the world.

Summary

The practice of segregating model execution and decisioning, by serving models via service endpoints and employing a configurable decision engine, is a prudent and advantageous strategy for organisations leveraging AI and machine learning. It enhances efficiency, transparency, flexibility, security, and compliance, all of which are essential factors for successful AI implementation.

As we continue to rely on AI and ML models to drive business decisions and optimise processes, embracing this segregation approach is a step towards responsible and effective AI deployment, ensuring that the technology serves the best interests of both credit grantors and society at large.

Credit issuers still need a collaborative team of contributors who understand the business domain, the data, and the tools for analysis and model building. Segregation of modelling and decisioning frees up both sides of the collaborative team to focus on problem areas where their respective skill sets promise the most productive outcomes.

About the Author

Michael Lorenat is the CTO of ADEPT Decisions and has held a wide range of roles in the software, financial services, and credit risk industries since 1980.

About ADEPT Decisions

We disrupt the status quo in the lending industry by providing fintechs with customer decisioning, credit risk consulting and advanced analytics to level the playing field, promote financial inclusion and support a new generation of financial products.